Welcome to the 4,178 (!!!) new members of the curiosity tribe who have joined us since Friday. Join the 84,638 others who are receiving high-signal, curiosity-inducing content every single week.

Today’s newsletter is brought to you by LMNT!

LMNT is my healthy alternative to sugary sports drinks.

I work out a lot and pay close attention to what I put in my body (other than the occasional whiskey). That means that I reject all the standard sports drinks and their sugar-filled formulas.

LMNT is a tasty solution—an electrolyte drink mix with everything you need and nothing you don't. That means a science-backed electrolyte ratio with no sugar, no coloring, no artificial ingredients, or any other junk.

Get your free LMNT Sample pack below (you only cover the cost of shipping). You’re going to love it.

Today at a Glance:

Cognitive biases are systematic errors in thinking that negatively impact decision-making quality and outcomes.

Combating them starts, first and foremost, with awareness of the biases, both academic and practical.

Today’s deep dive covers five common cognitive biases that derail decision-making: Fundamental Attribution Error, Naïve Realism, the Curse of Knowledge, Availability Bias, and Survivorship Bias.

Dangerous Mental Errors

Humans are fascinating creatures.

We can accomplish extraordinary feats of technology and engineering, and then fall victim to the most obviously flawed logic. For a hyper-intelligent species, our thinking and decision-making patterns can be pretty fractured.

Many of these fractures fall into the category of cognitive biases—systematic errors in thinking that negatively impact decision-making quality and outcomes.

Importantly, these are typically subconscious, automatic errors. We are wired to take shortcuts in our decision-making—to be more efficient and effective in the wild—but shortcuts are a double-edged sword. Speed and efficiency can be great, but when we systematically misinterpret the data, signal, and information from the world around us, it dramatically impacts the consistency and rationality of our decisions.

Fortunately, we can fight back and regain—at least a modicum of—control over the quality of our decision-making.

In today’s piece, I’ll cover five common cognitive biases that derail decision-making. For each, I’ll provide a definition, example, and perspectives on how to fight back.

This will be Part I of a multi-part series on cognitive biases and logical fallacies—as it’s a topic that deeply impacts all of our careers and lives. Developing an awareness of these errors—and a plan to combat them—will give you a legitimate competitive advantage in all of your pursuits.

Let’s dive in…

Fundamental Attribution Error

Definition

Fundamental Attribution Error is the human tendency to hold others accountable while giving ourselves a break.

It says that humans tend to:

Attribute someone else's actions to their character—and not to their situation or context.

Attribute our actions to our situation and context—and not to our character.

In short: We cut ourselves a break, but hold others accountable.

Why do we do this? Well, as with many of the biases we will cover, it likely developed as a heuristic (a problem-solving or decision-making shortcut), in this case one that simplified how we judge people we have only just met.

From an evolutionary perspective, quickly attributing negative actions to character—rather than situation or context—may have kept you alive, as you’d be more likely to avoid that individual in future interactions to play it safe.

But in a modern context, it can create real problems—a failure to recognize or empathize with the context and situational factors impacting others is at the core of many societal and organizational issues.

Example

The workplace is a common breeding ground for Fundamental Attribution Error.

It’s easy to form perspectives on the character of colleagues and bosses based on small pieces of incomplete information.

If a colleague arrives late for work, they’re just lazy, right?

This is clearly a flawed line of thinking: any number of factors, from traffic to a sick child, could have contributed to your colleague’s lateness.

The reality is that, in these instances, you are using limited information to create an overall picture of an individual. You’re seeing one square of a map and believing you know the map in its entirety.

How to Fight Back

You’ll never completely eliminate Fundamental Attribution Error, but you can limit its impact.

The first step is always awareness—keep it in mind, particularly as you build a body of experiences with new colleagues or acquaintances. This is when it’s most likely to strike.

Force yourself to slow down and evaluate the potential circumstances or situational factors that may be influencing an individual’s actions or behaviors.

You won’t always have the time to do so—shortcuts are often necessary and helpful—but with longer-term or important relationships, it’s worth the extra effort. You’ll build deeper, more trusting personal and professional bonds.

Naïve Realism

Definition

Naïve Realism is part of a broader category of so-called “egocentric biases” that are grounded in the reality that humans generally think very highly of themselves.

Specifically, Naïve Realism has two core pillars:

We believe that we see the world with perfect, accurate objectivity.

We assume that people who disagree with us must be ignorant, uninformed, biased, or stupid.

It often leads to a dangerous “bias blind spot”—a phenomenon in which we accurately identify cognitive biases in others, but are unable to identify them in ourselves.

Example

The most famous example of Naïve Realism is an experiment involving a highly contested Dartmouth vs. Princeton football game.

After the game, fans of each side were asked to watch the film of the game and evaluate the performance of each team. Interestingly, depending on which team they supported, they saw a very different game.

Dartmouth fans perceived the number of Princeton infractions as much higher; Princeton fans perceived the number of Dartmouth infractions as much higher.

The groups were incapable of objectivity, despite vocalizing their objectivity to the researchers prior to the experiment.

They watched the same game, but saw a very different one.

How to Fight Back

Naïve Realism is a foundational bias, so fighting back starts with basic awareness and acceptance of our own flaws and biases.

A few other ideas:

Surround yourself with people who think differently than you. Force the issue.

Learn to embrace being wrong. It’s a common trait of highly successful people: every time you discover you were wrong, you get closer to the truth.

Question your own beliefs. Always ask what assumptions or experiences have contributed to those beliefs.

Fighting back against Naïve Realism isn’t easy, but it’s so damn important.

The Curse of Knowledge

Definition

The Curse of Knowledge occurs when experts, or generally intelligent people, make the flawed assumption that others share their background and knowledge on a topic.

This makes it hard for them to teach or lead those still coming up the learning curve. They are literally “cursed” with knowledge that prevents them from teaching or managing effectively.

The Curse of Knowledge is commonly seen with business leaders, professors, or “experts” of specific fields. They incorrectly assume the audience has a baseline level of knowledge on a given topic. It leads to frustration and cultural tension on both sides of the teacher-student relationship.

Example

Your new marketing manager assumes you—a recent college graduate—understand the complexities of search engine optimization and grows increasingly frustrated as it becomes clear that you are new to the subject matter and are unable to keep up with her on the plan.

The manager’s expertise in SEO makes her unable to teach it effectively.

How to Fight Back

As the teacher, always be aware of the basis of your understanding of a topic. If it’s based on years of accumulated expertise, you have to bring others up the learning curve prior to building upon it.

The ELI5 (“Explain Like I’m 5”) rule works well: assume you’re teaching the topic to a 5-year-old until proven otherwise. Leverage the Feynman Technique!

As a student, communicate openly about the gaps in your foundational understanding of a more advanced topic. If it’s in a group setting, chances are that someone else is feeling the same way and is just afraid to say it!

Availability Bias

Definition

Humans love their shortcuts.

We evaluate situations based on the most readily available data, which tends to be what can be immediately recalled from memory. Our minds treat whatever comes to mind first as being of the utmost importance. In effect, we give our own memory too much credit.

Availability Bias is the tendency to massively overweight recent and new information in formulating opinions or making decisions.

Example

The best example of Availability Bias is the impact of the news cycle on our opinions, thinking, and decisions. Its persistent negativity cements a belief that the world is a dark, doomed place.

When exposed to consistent news stories about negative events, humans significantly overestimate the actual frequency and likelihood of these events taking place.

Our irrational fear of extremely low-probability events, like terrorist attacks, shark attacks, plane crashes, and child abductions, is a clear example of this in action.

How to Fight Back

There are two key ways to fight Availability Bias:

Focus on base rates—the actual prevalence of an event or feature in a given sample. In the example of shark attacks, compare the number of shark attacks globally to the number of people who went in the ocean. Slow down and consider the actual numbers.

Learn to turn off the news. Paradoxically, watching more news might make you less well-informed about the world around you.

There are other tactics and strategies, but these two will get you a long way.
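To make the base-rate comparison concrete, here’s a minimal Python sketch. The counts are hypothetical placeholders, not real shark-attack or beach-attendance statistics; plug in real figures from a source you trust before drawing conclusions.

```python
def base_rate(events: int, exposures: int) -> float:
    """Return the fraction of exposures that ended in the event."""
    if exposures <= 0:
        raise ValueError("exposures must be positive")
    return events / exposures


# HYPOTHETICAL numbers for illustration only, not real statistics.
hypothetical_attacks = 80                  # attacks in one year
hypothetical_ocean_visits = 500_000_000    # ocean visits in that same year

rate = base_rate(hypothetical_attacks, hypothetical_ocean_visits)
print(f"Risk per ocean visit: {rate:.8f}")
print(f"Roughly 1 in {round(1 / rate):,} visits")
```

The exact number matters less than the habit: divide the scary count by the size of the exposed population before reacting to it.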

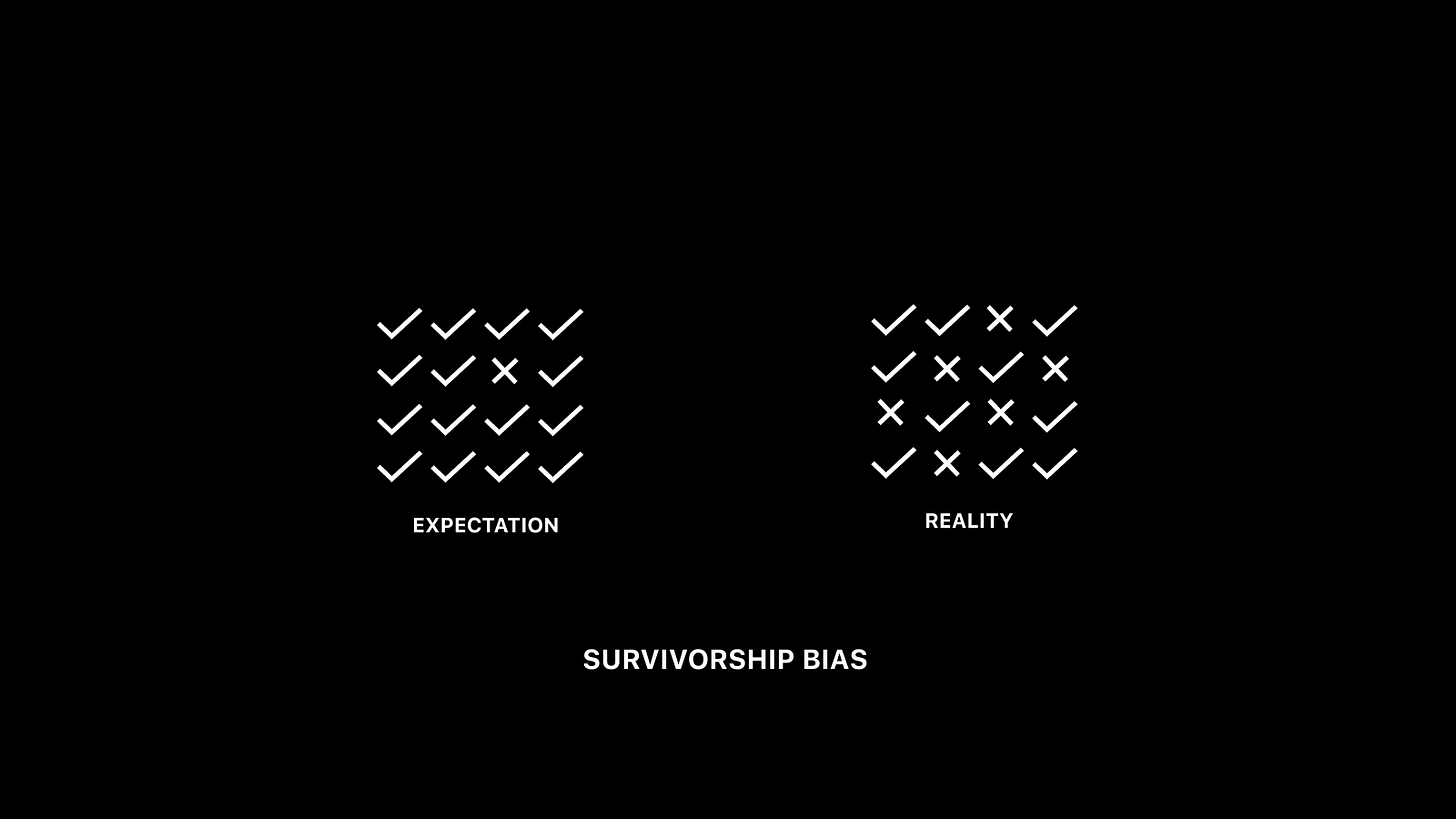

Survivorship Bias

Definition

We’ve all heard the phrase: History is written by the victors.

We all love studying victors: the epic stories of success, wealth, and fame. But studying and learning from “survivors” while systematically ignoring “casualties” creates material distortions in our conclusions.

Namely, we overestimate the base odds of success because we only read about successes.

Example

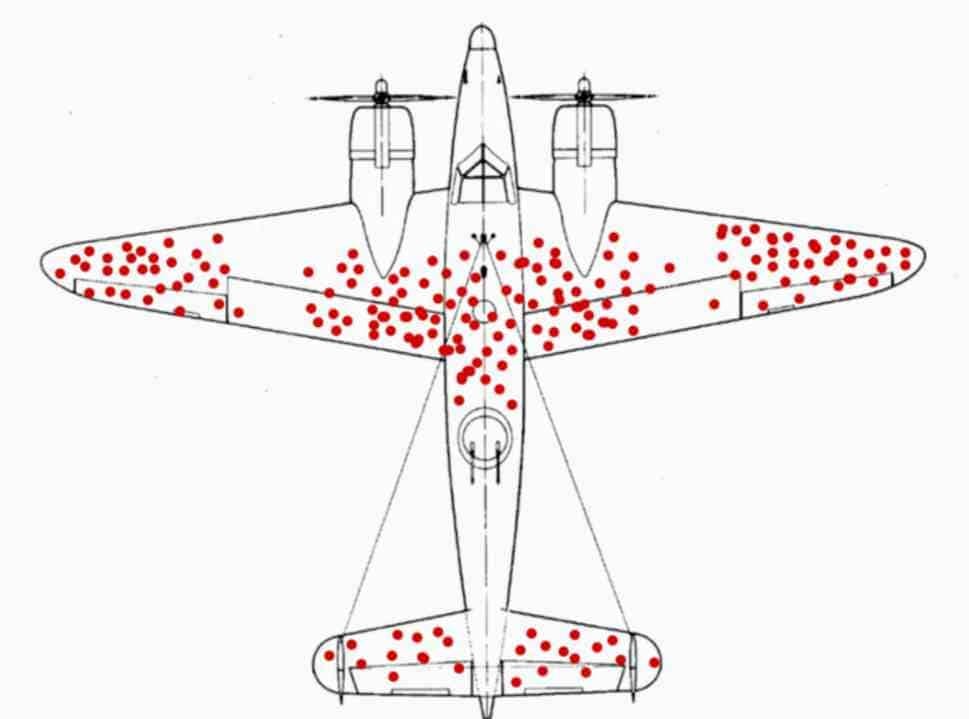

One of the most famous examples of survivorship bias comes from World War II.

The U.S. wanted to add reinforcement armor to specific areas of its planes. Analysts plotted the bullet holes and damage on returning bombers, deciding the tail, body, and wings needed reinforcement.

But a statistician named Abraham Wald noted that this would be a tragic mistake. By only plotting data on the planes that returned, they were systematically missing data on a critical, informative subset: the planes that were damaged and unable to return.

The "seen" planes had sustained damage that was survivable. The "unseen" planes had sustained damage that was not. Wald concluded that armor should be added to the unharmed regions of the survivors. Where the survivors were unharmed is where the planes were most vulnerable.

Based on his observation, the military reinforced the engine and other vulnerable parts, significantly improving the safety of the crews during combat. Wald had identified the survivorship bias and avoided its wrath.
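Wald’s insight can be illustrated with a toy Monte Carlo simulation in Python. This is not a reconstruction of Wald’s actual analysis. The section names, the three hits per plane, and the per-hit loss probabilities are all hypothetical, chosen so that engine hits are far more often fatal than hits elsewhere.

```python
import random
from collections import Counter

SECTIONS = ["engine", "fuselage", "wings", "tail"]
# HYPOTHETICAL chance that a single hit in each section brings the plane down.
LOSS_PROB = {"engine": 0.5, "fuselage": 0.05, "wings": 0.05, "tail": 0.05}


def simulate(n_planes: int, hits_per_plane: int = 3, seed: int = 42):
    """Return hit counts per section for all planes and for returning planes only."""
    rng = random.Random(seed)
    all_hits, returned_hits = Counter(), Counter()
    for _ in range(n_planes):
        # Every section is equally likely to be hit.
        hits = [rng.choice(SECTIONS) for _ in range(hits_per_plane)]
        # The plane returns only if it survives each hit (independent draws).
        survived = all(rng.random() >= LOSS_PROB[s] for s in hits)
        all_hits.update(hits)
        if survived:
            returned_hits.update(hits)
    return all_hits, returned_hits


def share(counter: Counter, section: str) -> float:
    """Fraction of all recorded hits that landed in the given section."""
    total = sum(counter.values())
    return counter[section] / total if total else 0.0


all_hits, returned_hits = simulate(20_000)
for s in SECTIONS:
    print(f"{s:9s} all planes: {share(all_hits, s):.2f}  "
          f"returners only: {share(returned_hits, s):.2f}")
```

Every section is hit equally often, yet engine hits show up less often on the planes that made it home. Reinforcing wherever the returners have the most holes would point you away from the engine, which is exactly the trap Wald avoided.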

How to Fight Back

Fighting back against Survivorship Bias involves two critical approaches:

Recognize the potential for “hidden” or “silent” evidence. What might you have learned from the casualties that is masked by the lack of data on them?

Focus on the base rates of success or failure: the underlying percentage of a population that has a given outcome.

Develop a keen awareness of the potential for missing evidence and its impact on your judgement of the probability of success.

Remember: For every entrepreneur who took out a second mortgage on their house and then became a billionaire, there are 100 who did the same and went bankrupt…
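Here’s a toy Python simulation of that reminder. It assumes (hypothetically) one success for every 100 failures, that every success gets written up, and that only 1% of failures ever do. None of these numbers come from real data.

```python
import random


def simulate_stories(n_founders, p_success, p_cover_success, p_cover_failure, seed=7):
    """Return (true base rate, success rate among the stories that get told)."""
    rng = random.Random(seed)
    successes = told_successes = told_failures = 0
    for _ in range(n_founders):
        if rng.random() < p_success:
            successes += 1
            if rng.random() < p_cover_success:   # success gets written up
                told_successes += 1
        elif rng.random() < p_cover_failure:     # failure gets written up
            told_failures += 1
    true_rate = successes / n_founders
    told = told_successes + told_failures
    observed_rate = told_successes / told if told else 0.0
    return true_rate, observed_rate


# HYPOTHETICAL inputs: 1 success per 100 failures, all successes covered,
# only 1% of failures covered.
true_rate, observed_rate = simulate_stories(
    n_founders=101_000, p_success=1 / 101, p_cover_success=1.0, p_cover_failure=0.01
)
print(f"True base rate of success:        {true_rate:.1%}")
print(f"Success rate in the stories told: {observed_rate:.1%}")
```

With these inputs, the stories you read make success look like a coin flip, while the true base rate sits around 1%.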

Conclusion

So to recap Part I of the mental errors series:

Fundamental Attribution Error: We cut ourselves a break, but hold others accountable. Fight back by slowing down, recognizing, and empathizing with the situational and contextual factors that may impact others.

Naïve Realism: We believe that we see the world with perfect, accurate objectivity and we assume that people who disagree with us must be ignorant, uninformed, biased, or stupid. Fight back by surrounding yourself with people with different beliefs and embracing being wrong.

The Curse of Knowledge: Experts make the flawed assumption that others have the same background and knowledge on a topic as they do. This makes it hard for them to teach or lead those still coming up the learning curve. Fight back as a teacher by developing an awareness of the basis of your knowledge on a topic; fight back as a student by forcing that awareness in the teacher.

Availability Bias: We evaluate situations based on the most readily available data, which tends to be what can be immediately recalled from memory. Fight back by focusing on base rates of low-probability events and turning off the news.

Survivorship Bias: Studying “survivors” and systematically ignoring “casualties” creates material distortions in our conclusions. Namely, we overestimate the base odds of success because we only read about successes. Fight back by focusing on base rates of success and recognizing the potential for silent evidence.

I hope you find this series as valuable as I do. I encourage you to work through it slowly and take the time to think deeply about each item. Your decision-making consistency and quality will improve as a result.

Where It Happens Podcast

Is Terra's Luna ($LUNA) Headed To The Moon? | Ndamukong Suh

Watch it on YouTube and listen to it on Apple Podcasts or Spotify. Want more? Join the 4,000+ in our unique community on Discord.

Special thanks to our sponsors for providing us with the support to bring this episode to life.

This episode is brought to you by Outer. Spring is in the air in both NYC and Miami, and we can’t get enough of our Outer outdoor furniture. Their furniture looks incredible and is durable. Personally, we love the Outdoor Loveseat.

Right now for our audience exclusively they are offering $300 off any product + free shipping until May 1. This is one of the most generous offers of any sponsor we’ve had. Did we mention they have a 2-week free trial? Go to liveouter.com/room to get the deal.

This episode is also brought to you by LEX. We’re always looking for breakthrough businesses. And, our audience has repeatedly asked how they can invest in commercial real estate. Lex is the easy answer. LEX turns individual buildings into public stocks via IPO, so you can invest, trade, and manage your own portfolio of high-quality commercial real estate. It’s so simple to get started today.

Sign up for free at lex-markets.com/room and get a $50 bonus exclusively for our audience when you deposit at least $500. It’s a no-brainer.

Sahil’s Hiring Zone

Featured Opportunities

DaoHq - Founding Solidity Developer

Artifact - Product Designer

Practice - Product Designer

Clay - Growth Manager

Assemble - Software Engineer

Elevate Labs - Senior Growth Marketing Manager

Pallet - BD & Sales, GTM & Ops—Creator Growth

Skio - Account Executive

SparkAI - Full Stack Engineer

The full board with 30+ other roles can be found here!

Talent Collective

Excited to announce the launch of my new talent collective! Members of the collective will get exclusive access to opportunities at my favorite high-growth startups across the tech landscape.

Completely free for candidates. Use the link here to apply!

Companies can get exclusive access to these terrific candidates by buying a collective pass here.